Have you ever seen a webpage appear in search results without a description snippet, even though it seems relevant? The culprit may be robots.txt, a file that is crucial for SEO but that sometimes blocks pages unintentionally.

What is the Problem?

In simple terms, robots.txt acts as a website's gatekeeper, telling search engine crawlers such as Googlebot which URLs they may crawl. When a page is blocked by robots.txt, search engines can't read its content. The page can't rank on that content, and if it is indexed at all (for example, because other sites link to it), it appears without a description. This significantly impacts your website's visibility and potential traffic.
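To make the gatekeeper idea concrete, here is a minimal sketch that uses Python's standard `urllib.robotparser` module to ask whether a crawler may fetch a URL. The rules, the `example.com` URLs, and the helper name `is_crawl_allowed` are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def is_crawl_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the rules in robots_txt let user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

print(is_crawl_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/private/report.html"))  # False
print(is_crawl_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/blog/post.html"))       # True
```

Note that this module does simple prefix matching and does not interpret `*` or `$` inside paths, so treat it as a quick check rather than an exact model of any particular search engine.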

Behind the Blockades: Understanding the Causes

Several factors can lead to pages being blocked by robots.txt:

1. Misconfigured Directives: Even a single typo in a "Disallow" directive in your robots.txt can block entire sections of your website.

2. Accidental Blocking: Blocking sensitive pages such as logins is understandable, but accidentally blocking relevant content can be detrimental.

3. Outdated Instructions: Old robots.txt rules left over from website migrations or plugins might still be in place, causing unintended blockages.

4. Conflicting Directives: Overlapping "Allow" and "Disallow" directives create confusion, potentially blocking pages you want indexed.

5. Incorrect Wildcards: Using wildcards like "*" too broadly can unintentionally block desired URLs.

6. Plugin Interference: Some plugins might generate robots.txt rules that unintentionally block specific pages.

7. Server-Level Restrictions: Server configurations or access control lists can also restrict search engine access, mimicking a robots.txt block.

8. Dynamically Generated Pages: Dynamically generated URLs (for example, those with query parameters) can be caught by rules that were written before those URLs existed, so search engines might be unable to crawl them unless the robots.txt rules are adapted.

9. Targeting Specific Search Engines: Blocking specific bots such as Bingbot with robots.txt can reduce your broader search visibility.

10. Misunderstanding Robots.txt Functionality: Using robots.txt to hide content from search results isn't effective: search engines can still discover the URL through backlinks and index it without crawling it.
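Several of the causes above (typos, overly broad rules) can be caught mechanically. The following is a rough sketch of a robots.txt linter; the function name, the set of recognised directives, and the sample file are assumptions chosen for illustration, not an official validator.

```python
# Directive names this sketch treats as valid (an assumption; crawlers differ).
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}

def lint_robots_txt(robots_txt: str) -> list[str]:
    """Return human-readable warnings for suspicious lines."""
    warnings = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {number}: missing ':' in {raw!r}")
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        key = name.lower()
        if key not in KNOWN_DIRECTIVES:
            warnings.append(f"line {number}: unknown directive {name!r} (typo?)")
        elif key == "disallow" and value in {"/", "/*"}:
            warnings.append(f"line {number}: rule blocks the entire site")
        elif key in {"allow", "disallow"} and value and not value.startswith(("/", "*")):
            warnings.append(f"line {number}: path {value!r} does not start with '/'")
    return warnings

# Hypothetical file containing three typical mistakes.
SAMPLE = """\
User-agent: *
Dissallow: /tmp/
Disallow: /
Disallow: blog/
"""

for warning in lint_robots_txt(SAMPLE):
    print(warning)
```

A site-wide "Disallow: /" is sometimes intentional (for a staging site, say), so the output is a list of things to review, not a list of errors.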

The Impact on Your Website

Search engines cannot read the content of pages blocked by robots.txt, which leads to several negative consequences:

1. Reduced Search Visibility: Blocked pages rarely rank in search results because their content can't be read, significantly reducing your website's overall organic traffic.

2. Missed Ranking Opportunities: Valuable content remains hidden, so you lose out on relevant keywords and potential customers.

3. Inconsistent Search Results: Pages might appear in results without descriptions due to robots.txt blocking, creating a poor user experience.

4. Wasted Website Efforts: Content creation and SEO efforts become ineffective for blocked pages.

Solving the Blockade: Unlocking Your Website's Potential

Fortunately, solving the "blocked by robots.txt" issue is often straightforward. Here's how:

1. Identify Blocked Pages: Use tools like Google Search Console's URL Inspection tool or online robots.txt testers to pinpoint blocked URLs.

2. Review Your robots.txt: Open your robots.txt file (usually located at your website's root directory) and carefully review the directives.

3. Remove Unnecessary Blocking: Look for typos, outdated rules, or overly broad wildcards causing unintended blockages.

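4. Adjust Conflicting Directives: Ensure "Allow" and "Disallow" directives work in harmony. Google applies the most specific (longest) matching rule, so check that the rule that wins for each URL reflects your indexing preferences.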

5. Check Plugin Interference: Disable or update plugins that might be generating conflicting robots.txt rules.

6. Address Server-Level Restrictions: If necessary, consult your hosting provider to check for server-level access limitations.

7. Handle Dynamic Pages: Consider using sitemaps and appropriate robots.txt directives to guide search engines to dynamic content.

8. Review Search Engine Targeting: Ensure you're not unintentionally blocking desired search engine bots.

9. Validate and Submit Changes: Use robots.txt testing tools and resubmit your sitemap to search engines after making changes.

10. Monitor and Maintain: Regularly review your robots.txt file and update it as your website evolves to prevent future blockages.
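For step 4, it can help to see how overlapping "Allow" and "Disallow" rules are resolved. The sketch below follows the precedence described in the Robots Exclusion Protocol (RFC 9309): the longest matching pattern wins, and "Allow" wins a tie. The function names and the `/shop/` rules are hypothetical, and real crawlers may differ in edge cases.

```python
import re

def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern: '*' = any characters, trailing '$' = end of URL."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))

def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Evaluate ("allow" | "disallow", pattern) pairs from one user-agent group.

    The longest matching pattern wins; on a tie, allow wins.
    A path that matches no rule is allowed.
    """
    best_length, allowed = -1, True
    for kind, pattern in rules:
        if not pattern:  # an empty pattern matches nothing
            continue
        if pattern_to_regex(pattern).match(path):
            is_allow = kind == "allow"
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length, allowed = len(pattern), is_allow
    return allowed

# Hypothetical overlapping rules: block the shop, but keep the sale pages crawlable.
rules = [("disallow", "/shop/"), ("allow", "/shop/sale/")]

print(is_allowed(rules, "/shop/cart"))        # False
print(is_allowed(rules, "/shop/sale/shoes"))  # True
print(is_allowed(rules, "/about"))            # True
```

Python's built-in `urllib.robotparser` instead applies the first matching rule in file order, so it can disagree with this sketch when rules overlap.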

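Example: Imagine you have a blog with helpful guides that accidentally got blocked by a "Disallow: /blog/*" directive in robots.txt. Removing only the wildcard is not enough, because "Disallow: /blog/" blocks exactly the same URLs. By identifying this rule, deleting it (or narrowing it to the paths you really want blocked), and resubmitting your sitemap, you'll unlock these valuable pages for search engine crawling, indexing, and potential traffic.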
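A small sketch shows why the trailing wildcard in this example makes no difference. The helper `rule_matches` and the URL path are hypothetical, and the helper ignores the `$` end-of-URL anchor to stay short.

```python
import re

def rule_matches(pattern: str, path: str) -> bool:
    """True if a robots.txt path pattern matches path.

    Matching is by prefix, and '*' stands for any run of characters.
    """
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.match(regex, path) is not None

post = "/blog/fixing-robots-txt"  # hypothetical URL path of one guide

print(rule_matches("/blog/*", post))        # True: blocked
print(rule_matches("/blog/", post))         # True: still blocked without the wildcard
print(rule_matches("/blog/drafts/", post))  # False: a narrower rule leaves the guide crawlable
```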

FAQs on "Pages Are Blocked by robots.txt":

1. What is robots.txt and why does it block pages?

robots.txt is a file on your website that controls which pages search engines like Google can crawl. You might use it to block pages containing sensitive information, duplicate content, or pages under development. When a search engine encounters a "Disallow" directive in your robots.txt for a specific page or directory, it won't crawl that content.

2. How can I tell if a page is blocked by robots.txt?

Several methods can help you identify pages blocked by robots.txt:

·

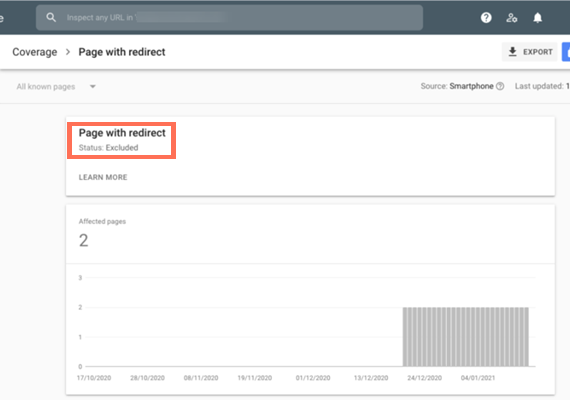

Google

Search Console: This free tool provides a "Coverage" report

showing URLs with crawling errors, including those blocked by robots.txt.

·

Online

robots.txt testers: These tools analyze your robots.txt file and highlight

any URLs it might be blocking.

·

Checking

the robots.txt file directly: Access your website's root directory and

locate the robots.txt file to see its directives.

3. What are the risks of blocking pages with robots.txt?

While robots.txt offers control, it's important to understand potential drawbacks:

· Unintended blocking: Misconfigured directives can accidentally block important pages, hindering search visibility.

· Content still discoverable: Even blocked pages might appear in search results if linked to from other sites, though without a description.

· Alternative methods for exclusion: For complete exclusion from search results, consider password protection or the noindex meta tag. Crawlers can only see a noindex tag on a page they are allowed to crawl, so don't block that page in robots.txt as well.
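To check whether a page actually carries the noindex meta tag mentioned above, something like the following sketch could be used. It relies only on Python's standard `html.parser`; the class and function names and the sample HTML are illustrative, and it does not look at the `X-Robots-Tag` HTTP header, which can carry the same directive.

```python
from html.parser import HTMLParser

class RobotsMetaFinder(HTMLParser):
    """Collect the directives of every <meta name="robots" content="..."> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )

def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with the noindex directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives

# Hypothetical page source.
page = '<html><head><meta name="robots" content="noindex, follow"></head><body></body></html>'
print(has_noindex(page))  # True
```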

4. How can I fix issues with blocked pages?

If you find important pages blocked by robots.txt, take these steps:

· Identify the blocking directive: Examine your robots.txt file or use a testing tool to pinpoint the rule.

· Remove or adjust the directive: If the rule is unnecessary, remove it entirely. If it is still needed, modify it or add an "Allow" rule to permit access to specific URLs within the blocked path.

· Submit your sitemap to search engines: Help search engines rediscover the changed pages by submitting an updated sitemap.

5. Should I block all dynamic pages with robots.txt?

Generally, it's not recommended to block all dynamic pages. Search engines need to understand your site's structure and content to index it effectively. Blocking dynamic pages could hinder indexing and search performance.

6. Does using robots.txt prevent content scraping?

robots.txt primarily guides search engines, not web scrapers. Its rules are advisory: well-behaved crawlers follow them, but a scraper can simply ignore the file and access blocked content anyway. Consider other methods like password protection or legal measures for stronger protection.

7. Can I block specific search engines with robots.txt?

Yes, you can use user-agent directives in your robots.txt file to target specific search engines or bots. However, exercise caution, as blocking major search engines significantly impacts your discoverability.
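As a rough illustration of user-agent targeting, the sketch below parses a hypothetical robots.txt that shuts out Bingbot entirely while only keeping other crawlers out of `/admin/`, then checks the result with Python's standard `urllib.robotparser`. The rules and URLs are invented for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for Bingbot, one default group for everyone else.
ROBOTS_TXT = """\
User-agent: Bingbot
Disallow: /

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Bingbot", "https://example.com/blog/"))           # False
print(parser.can_fetch("Googlebot", "https://example.com/blog/"))         # True
print(parser.can_fetch("Googlebot", "https://example.com/admin/login"))   # False
```

A crawler that matches a named group uses that group and ignores the default one, which is why a stray bot-specific block can remove a whole search engine without affecting the others.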

8. What about using robots.txt to hide pages from competitors?

robots.txt isn't effective for hiding content from competitors. They can still discover pages through other means, and search engines might still index blocked pages based on backlinks.

9. Does robots.txt affect how my website appears in search results?

Yes, blocking important pages with robots.txt can keep them out of search results or leave them listed without a description. This can negatively impact your website's visibility and search traffic.

10. How can I optimize my robots.txt file for SEO?

Follow these practices for an SEO-friendly robots.txt:

· Allow crawling of essential pages: Ensure search engines can access and index important content.

· Use specific directives: Avoid blocking entire sections unnecessarily; target specific paths or URLs.

· Test and review regularly: Check for unintended blocking and update your robots.txt as your website evolves.
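Regular testing can be partly automated. One possible approach, sketched below, is to keep a list of URLs that must stay crawlable and re-check it whenever robots.txt changes. The function name, the default user agent, and the sample data are assumptions; `urllib.robotparser` does prefix matching only, so rules that depend on `*` or `$` need a different checker.

```python
from urllib.robotparser import RobotFileParser

def find_blocked(robots_txt: str, must_crawl: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs from must_crawl that the given rules block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in must_crawl if not parser.can_fetch(user_agent, url)]

# Hypothetical rules and a hypothetical list of important pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /blog/
"""
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/blog/robots-guide",
]

print(find_blocked(ROBOTS_TXT, IMPORTANT_URLS))  # ['https://example.com/blog/robots-guide']
```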
